Recently I got a comment on my first Blazor and WordPress blog post saying that my demo wasn’t working. I hadn’t looked at it in months, but sure enough, my weather forecast component wasn’t rendering. Blazor itself was loading, but it was falling through to the ‘route not found’ condition.

I have learned a bit more since my original experiment, so I dug back into my demo and made some improvements.

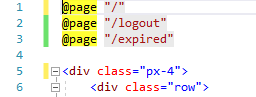

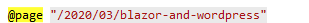

In my first version, I used the router and set my @page directive to match the URL of the blog post.

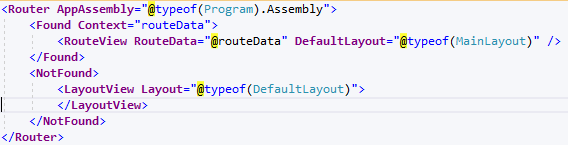

For some reason the router was no longer recognizing that route. I assume some WordPress upgrade changed the URL rewriting, but I didn’t look into that closely – I decided that matching the route was the wrong approach anyway. Instead, I just updated the router (my App.razor file) to rely on the NotFound condition:

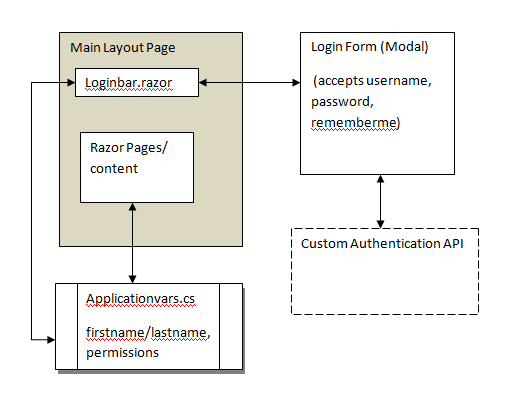
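For readers who want it as text, a router set up this way might look like the following. Treat it as a sketch, not necessarily identical to my file: it assumes the `Router`, `RouteView`, and `LayoutView` components from the standard Blazor project template and a layout component named `DefaultLayout`.

```razor
@* App.razor: sketch only. No component declares an @page route,
   so every URL falls through to NotFound. *@
<Router AppAssembly="@typeof(App).Assembly">
    <Found Context="routeData">
        @* Left in place, but not reached while no routes are defined. *@
        <RouteView RouteData="@routeData" DefaultLayout="@typeof(DefaultLayout)" />
    </Found>
    <NotFound>
        @* Rendered for every URL: the layout itself hosts the embedded component. *@
        <LayoutView Layout="@typeof(DefaultLayout)" />
    </NotFound>
</Router>
```

The point of the design is that the app no longer cares what URL WordPress serves the post from.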

Now my app has no routes defined, and I rely on my DefaultLayout.razor file to always render. Note that I can likely get rid of the `<Found>` condition in the router. I didn’t, only because it was working and I didn’t want to mess with it anymore.

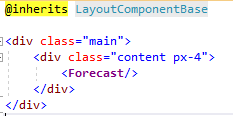

My DefaultLayout.razor file is pretty simple: all it essentially does is render my weather forecast component. I think this is a much better pattern to use when embedding Blazor in WordPress. If you do need to deal with routing, my recommendation would be to either sniff the URL in your Blazor app and decide what to do, or just control the state of your components by setting variables that cause components to either show or hide.
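Here is a minimal sketch of a layout that combines both ideas: it renders the weather component, and it checks the URL to decide whether to show it. The component name `WeatherForecast` and the `/weather` URL fragment are placeholders for illustration, not names taken from my actual site.

```razor
@* DefaultLayout.razor: sketch only. WeatherForecast and the "/weather"
   URL fragment are placeholder names. *@
@inherits LayoutComponentBase
@inject NavigationManager Navigation

@if (showWeather)
{
    <WeatherForecast />
}

@code {
    // State variable that controls whether the component is shown.
    private bool showWeather;

    protected override void OnInitialized()
    {
        // Navigation.Uri is the absolute URL of the page hosting the app,
        // which here is the WordPress post rather than a Blazor route.
        var path = new Uri(Navigation.Uri).AbsolutePath;
        showWeather = path.Contains("/weather", StringComparison.OrdinalIgnoreCase);
    }
}
```

If you always want the component to render, drop the URL check and leave only the component tag. Any other event (a button click, a callback from another component) can flip the same variable to show or hide it.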

A couple other thoughts:

- You may have to add MIME types to your WordPress server if your app doesn’t work or you get console errors. Take a look at the web.config that gets generated when you publish, and you will see the ones you need. If you manage the WordPress hosting server, you can add them in your admin panel. If not, there are some WordPress plugins that let you manage MIME types.

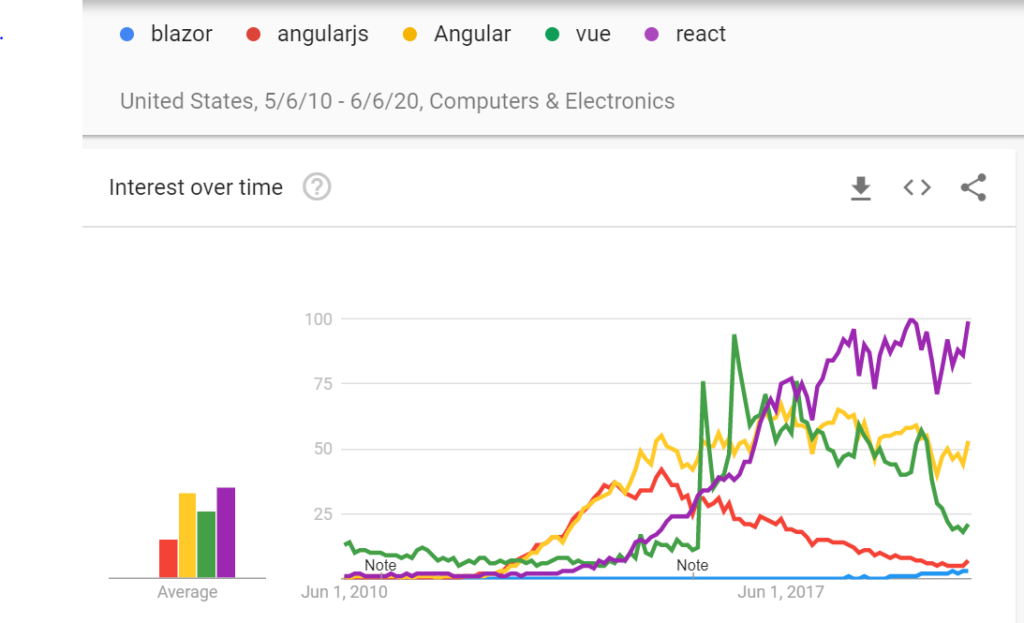

- I am beginning to wonder if embedding Blazor in WordPress is the right architecture. The other option is to build a standalone Blazor app that calls the WordPress API and pulls all the WordPress content into it. That way you can still maintain the content in WordPress, but you have the full flexibility of Blazor routing. If you don’t change your themes a lot, and you don’t require a lot of plugins, this approach might be better. Just a thought. SEO would be an issue with this approach though, since search engines don’t appear to index Blazor apps.
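To make the second idea more concrete, here is a rough sketch of a component in a standalone Blazor app that pulls posts from the WordPress REST API. I haven’t built this out; it assumes the site exposes the standard `/wp-json/wp/v2/posts` endpoint, that cross-origin requests to it are allowed, and that `HttpClient` is registered for injection as in the Blazor WebAssembly template. The `example.com` address and the `WpPost` and `RenderedText` class names are placeholders.

```razor
@* PostList.razor: sketch only. The site URL and class names are placeholders. *@
@using System.Net.Http.Json
@using System.Text.Json.Serialization
@inject HttpClient Http

@if (posts == null)
{
    <p>Loading...</p>
}
else
{
    <section>
        @foreach (var post in posts)
        {
            <article>
                @* WordPress returns rendered HTML, so it is emitted as markup.
                   Only do this for content you trust. *@
                <h2>@((MarkupString)post.Title.Rendered)</h2>
                @((MarkupString)post.Content.Rendered)
            </article>
        }
    </section>
}

@code {
    private List<WpPost> posts;

    protected override async Task OnInitializedAsync()
    {
        // Fetch the five most recent posts as JSON.
        posts = await Http.GetFromJsonAsync<List<WpPost>>(
            "https://example.com/wp-json/wp/v2/posts?per_page=5");
    }

    // Only the fields used above are mapped; the API returns many more.
    public class WpPost
    {
        [JsonPropertyName("id")] public int Id { get; set; }
        [JsonPropertyName("link")] public string Link { get; set; }
        [JsonPropertyName("title")] public RenderedText Title { get; set; }
        [JsonPropertyName("content")] public RenderedText Content { get; set; }
    }

    public class RenderedText
    {
        [JsonPropertyName("rendered")] public string Rendered { get; set; }
    }
}
```

With something like this in place, Blazor owns the routing and layout, and WordPress is reduced to a content store with an editing UI.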

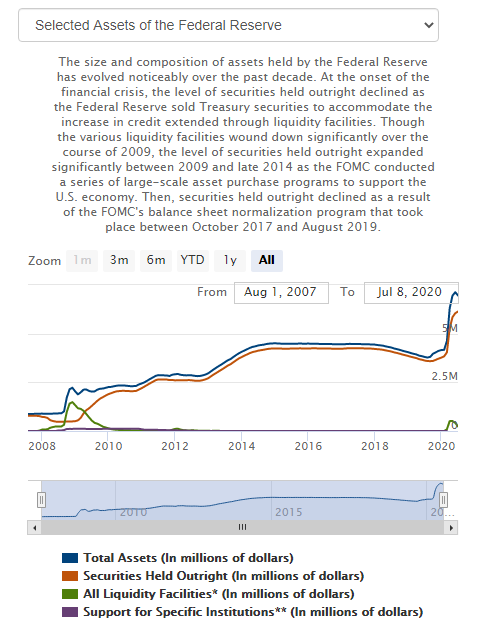

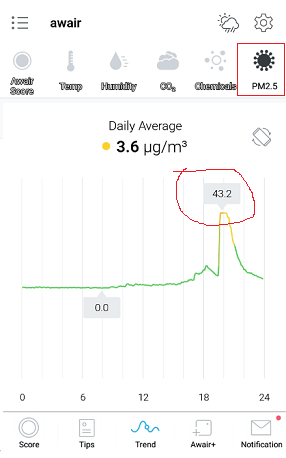

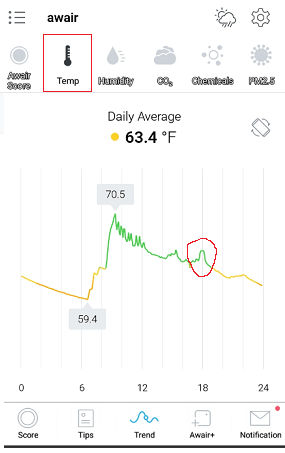

For reference, below is the working example of the Blazor rendered weather forecast:

I am surprised there is not more chatter on the internet about integrating WordPress and Blazor – it’s a pretty interesting way to quickly add components to a WordPress site. If you follow the instructions from my previous post, along with the things I learned above, you can easily get it set up.